Inconvo users can now test Ask-AI's performance on their customer analytics data before integrating it, building trust by measuring accuracy before deployment.

Adding examples to your dataset is easy thanks to the Inconvo playground.

Just ask the questions you expect your users to ask, then click the plus icon to add them to the annotation queue.

For each annotation you add to your queue, you will be asked to compare your input with the answer your Ask-AI generated.

If the reference answer is correct, you can add it to the dataset as is; otherwise, edit the reference answer so that it is correct.

Click "Add to dataset", and the example will be checked each time you evaluate your Ask-AI.

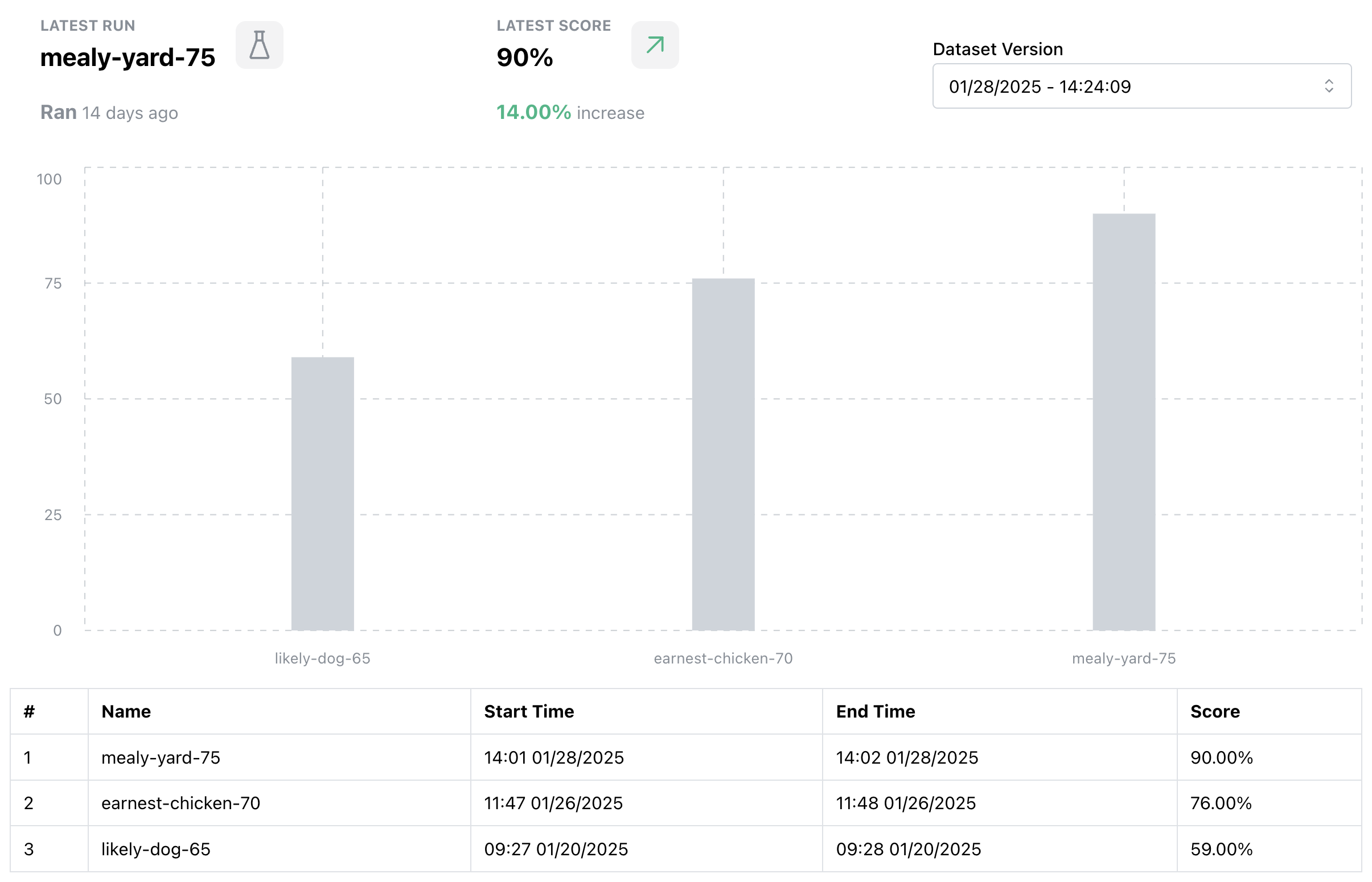
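
To make the queue-to-dataset step concrete, here is a minimal Python sketch of it. It is not Inconvo's API: the `Annotation` and `Example` classes, the `review` function, and the sample questions and answers are all hypothetical, and it assumes the answer Ask-AI generates serves as the starting reference answer that you either accept or correct.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical model of the playground workflow; not Inconvo's API.


@dataclass
class Annotation:
    """An item in the annotation queue: a question and Ask-AI's generated answer."""
    question: str
    generated_answer: str


@dataclass
class Example:
    """A dataset example: a question paired with its verified reference answer."""
    question: str
    reference_answer: str


def review(annotation: Annotation, corrected_answer: Optional[str] = None) -> Example:
    """Turn a queued annotation into a dataset example.

    If the generated answer is correct, pass no correction and it becomes the
    reference answer; otherwise pass the corrected answer to use instead.
    """
    reference = (
        corrected_answer if corrected_answer is not None else annotation.generated_answer
    )
    return Example(question=annotation.question, reference_answer=reference)


# Made-up queue contents for illustration.
queue = [
    Annotation("How many active customers did we have last month?", "1,240"),
    Annotation("What was our churn rate in Q2?", "4.1%"),
]

dataset: list[Example] = []
dataset.append(review(queue[0]))                            # answer was correct
dataset.append(review(queue[1], corrected_answer="3.8%"))   # answer needed editing
```

Keeping the correction optional mirrors the two outcomes described above: accept the answer as is, or edit it before it enters the dataset.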

You can easily see how well your Ask-AI is performing by looking at the evaluations view on the Inconvo platform.
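
Conceptually, an evaluation run re-asks every question in the dataset and scores Ask-AI's answers against the reference answers. The Python sketch below illustrates that idea under stated assumptions: `evaluate`, `normalize`, and the `ask_ai` callable are hypothetical stand-ins rather than Inconvo functions, the sample data is made up, and scoring is a case- and whitespace-insensitive exact match, which is not necessarily how the Inconvo platform grades answers.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical sketch of an evaluation run; not the Inconvo platform's implementation.


@dataclass
class Example:
    """A dataset example: a question and its verified reference answer."""
    question: str
    reference_answer: str


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so formatting differences don't count as errors."""
    return " ".join(text.lower().split())


def evaluate(dataset: list[Example], ask_ai: Callable[[str], str]) -> tuple[float, list[str]]:
    """Ask every dataset question and return (accuracy, questions answered incorrectly).

    Assumption: an answer is correct only if it exactly matches the reference
    answer after normalization.
    """
    if not dataset:
        raise ValueError("cannot evaluate an empty dataset")
    failed = [
        ex.question
        for ex in dataset
        if normalize(ask_ai(ex.question)) != normalize(ex.reference_answer)
    ]
    accuracy = (len(dataset) - len(failed)) / len(dataset)
    return accuracy, failed


# Usage with a stubbed Ask-AI that answers from a lookup table (made-up values).
dataset = [
    Example("How many active customers did we have last month?", "1,240"),
    Example("What was our churn rate in Q2?", "3.8%"),
]
stub_answers = {
    "How many active customers did we have last month?": "1,240",
    "What was our churn rate in Q2?": "4.1%",
}
accuracy, failed = evaluate(dataset, lambda q: stub_answers[q])
print(f"accuracy: {accuracy:.0%}")  # accuracy: 50%
print(failed)  # ['What was our churn rate in Q2?']
```

Exact matching is easy to reason about but strict: an answer that is correct but phrased differently from the reference would be scored as wrong, so a real evaluator may need a more tolerant comparison.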

Evaluations help you verify accuracy and reliability, and build trust, before integrating Ask-AI with customer-facing analytics. Here’s why this matters: in customer analytics, precision is critical, because users rely on AI-generated insights to make important decisions.

In short, evaluations give you confidence that Ask-AI is ready to meet customer needs and deliver dependable insights every time.